Introduction to Graph Runner with Vitis AI 1.4

Overview

Getting from a well-trained model to a successful deployment on AI inference hardware often takes time, and deployment on FPGAs with Vitis AI is no exception. One practical reason is that real-world neural network models vary widely in their structures and operators, so the acceleration engine may not fully support every part of a given model. The usual approach is to split the model into multiple subgraphs, some for the DPU and some for the CPU; however, users then have to deploy the subgraphs one by one. This manual deployment flow makes development on FPGAs more difficult.

In the recent Vitis AI 1.4 release, Xilinx introduced a completely new set of software APIs, Graph Runner. It is designed to convert a model into a single graph and makes deployment easier for models with multiple subgraphs. Under the current Vitis AI framework, it still takes three steps to deploy a TensorFlow, PyTorch, or Caffe model on an FPGA or ACAP platform.

Step 1: Quantize the model, i.e. convert it from floating-point format to int8 format.

Step 2: Compile the quantized model into an XIR graph, which is a universal representation of the model as a computational graph.

Step 3: Invoke the VART (Vitis AI Runtime) APIs to run the XIR graph.

This article focuses on Step 3: how to call the VART APIs to run an XIR graph. It assumes we already have an XIR graph in hand, and shows how to create a runner and get started with Graph Runner. For quantization and compilation, please refer to Vitis AI Tools for more information.
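To make Step 1 more concrete, here is a minimal sketch of what converting a float value to int8 can look like. It assumes a power-of-two scale expressed as a number of fractional bits (`fix_pos`); the helper names are hypothetical and are not part of any Vitis AI API. The real quantizer also calibrates the scale per tensor, which is not shown here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper (not a Vitis AI API): map a float to int8 using a
// power-of-two scale. fix_pos is the assumed number of fractional bits,
// so the scale is 2^fix_pos.
inline int8_t quantize_to_int8(float value, int fix_pos) {
  float scaled = std::round(value * std::ldexp(1.0f, fix_pos));
  // Saturate to the int8 range instead of wrapping around.
  scaled = std::min(127.0f, std::max(-128.0f, scaled));
  return static_cast<int8_t>(scaled);
}

// Hypothetical inverse: recover an approximate float from the int8 value.
inline float dequantize_from_int8(int8_t value, int fix_pos) {
  return std::ldexp(static_cast<float>(value), -fix_pos);
}
```

With `fix_pos = 6`, the value 0.5 maps to 32, and anything above roughly 1.98 saturates at 127.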

Quick Introduction to the XIR Graph

An XIR graph is a simple representation of a computational DAG (Directed Acyclic Graph) in which the nodes are operations, i.e. XIR Ops such as Conv2d, Reshape, Concatenation, etc. An XIR Op has exactly one XIR Tensor as its output and one or more XIR Tensors as its inputs. The Xcompiler (see Vitis AI Compiler) marks some XIR Ops as the graph inputs and some XIR Ops as the graph outputs.

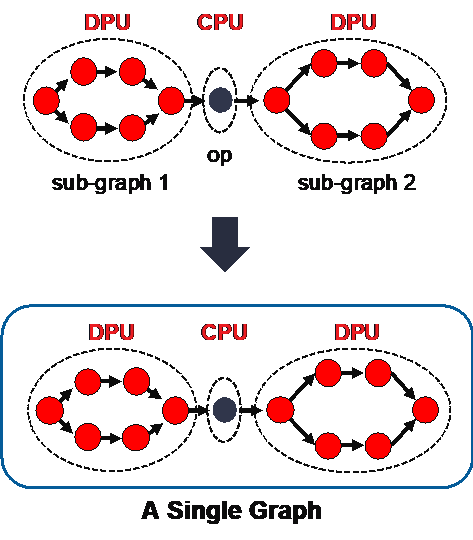
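The DAG structure described above can be sketched with a toy data structure. This is only an illustration: `ToyOp` and `topological_order` are hypothetical names, not XIR types, and the ordering uses Kahn's algorithm as one common way to visit every op after its producers.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for an XIR Op: it produces one output tensor
// (identified here by the op name) and consumes the outputs of other ops.
struct ToyOp {
  std::string name;
  std::string type;                 // e.g. "conv2d", "concat"
  std::vector<std::string> inputs;  // names of the producer ops
};

// Return the op names in an order where every op comes after its producers
// (Kahn's algorithm). If the graph has a cycle, fewer names are returned.
std::vector<std::string> topological_order(const std::vector<ToyOp>& ops) {
  std::map<std::string, int> pending;  // number of inputs not yet produced
  std::map<std::string, std::vector<std::string>> consumers;
  for (const auto& op : ops) {
    pending[op.name] = static_cast<int>(op.inputs.size());
    for (const auto& in : op.inputs) consumers[in].push_back(op.name);
  }
  // Ops without inputs play the role of graph inputs; start from them.
  std::vector<std::string> order;
  for (const auto& op : ops)
    if (op.inputs.empty()) order.push_back(op.name);
  for (std::size_t i = 0; i < order.size(); ++i) {
    const std::string current = order[i];  // copy: order may grow below
    for (const auto& consumer : consumers[current])
      if (--pending[consumer] == 0) order.push_back(consumer);
  }
  return order;
}
```

For a toy graph where `concat` consumes `conv` and `input`, and `conv` consumes `input`, the resulting order is `input`, `conv`, `concat`, regardless of the order in which the ops were listed.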

An XIR graph is segmented into many subgraphs, and each subgraph has a corresponding runner to run the subgraph on a specific device, e.g. "DPU", "CPU" or others. Without graph runner APIs, the end-user has to create these runners and manually connect inputs and outputs between the runners. This process is error-prone. With graph runner APIs, the end-user only needs to take care of the inputs and outputs of the whole graph.
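The difference between wiring subgraph runners by hand and using a single graph-level runner can be illustrated with a toy model. The types below are hypothetical stand-ins, not VART classes, and the sketch assumes the subgraphs form a simple linear chain, which real models need not do.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

using ToyTensor = std::vector<float>;

// Hypothetical stand-in for a per-subgraph runner bound to one device.
struct ToySubgraphRunner {
  std::string device;  // e.g. "DPU" or "CPU"
  std::function<ToyTensor(const ToyTensor&)> run;
};

// Hypothetical stand-in for a graph runner: it owns the subgraph runners
// and forwards each stage's output to the next stage, so the caller only
// deals with the inputs and outputs of the whole graph.
class ToyGraphRunner {
 public:
  explicit ToyGraphRunner(std::vector<ToySubgraphRunner> stages)
      : stages_(std::move(stages)) {}

  ToyTensor run(ToyTensor input) const {
    for (const auto& stage : stages_) input = stage.run(input);
    return input;
  }

 private:
  std::vector<ToySubgraphRunner> stages_;
};
```

Without the wrapper, the caller would have to invoke each stage and pass the intermediate tensors along manually, which is exactly the error-prone step the graph runner APIs remove.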

For ease of use, Xilinx deliberately designed the graph runner APIs to be the same as the subgraph runner APIs. They share the same interface, i.e. vart::Runner, or vart::RunnerExt, which is an extension of vart::Runner.

An example

Let's take resnet50.xmodel as an example to show how to deploy the model on a target board. It includes the following steps:

- Load the xmodel file

- Create a graph runner

- Fill in inputs

- Start the runner

- Post-process the output

To load an xmodel file, we can use the XIR API xir::Graph::deserialize (see XIR).

auto g_xmodel_file = std::string("/usr/share/vitis_ai_library/models/resnet50/resnet50.xmodel");

auto graph = xir::Graph::deserialize(g_xmodel_file);

To create a runner, we can use a Vitis AI Library API, vitis::ai::GraphRunner::create_graph_runner (see Vitis AI Library).

auto attrs = xir::Attrs::create();

auto runner =

vitis::ai::GraphRunner::create_graph_runner(graph.get(), attrs.get());

The attrs argument is not used yet.

To fill in the inputs, we need to use the VART API vart::RunnerExt::get_inputs() (see VART). It returns the input tensor buffers associated with the input XIR Ops marked by the Xcompiler, as mentioned above.

std::vector<vart::TensorBuffer*> inputs = runner->get_inputs();

For resnet50.xmodel, there is only one input tensor buffer.

auto tensor_buffer = inputs[0];

auto batch_size = inputs[0]->get_tensor()->get_shape()[0];

uint64_t data;

size_t size;

for(auto batch = 0; batch < batch_size; ++ batch) {

std::tie(data, size) = tensor_buffer->data({batch, 0, 0, 0});

// read input from file

...

}

An input tensor buffer consists of multiple contiguous memory regions, one per input image in the batch, so we have to read the images and fill in the inputs one by one.
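The per-image layout can be mimicked with a toy buffer to show why the fill loop works one batch entry at a time. `ToyBatchBuffer` and `fill_batch` are hypothetical names used only for illustration; they do not reproduce the vart::TensorBuffer interface, and the sketch assumes each image already has exactly the size of its region.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <utility>
#include <vector>

// Hypothetical stand-in for a batched input buffer: one separately
// allocated region per image, so no single pointer spans the whole batch.
class ToyBatchBuffer {
 public:
  ToyBatchBuffer(std::size_t batch, std::size_t bytes_per_image)
      : regions_(batch, std::vector<int8_t>(bytes_per_image, 0)) {}

  std::size_t batch_size() const { return regions_.size(); }

  // Address and size of the region that holds image number `batch`.
  std::pair<int8_t*, std::size_t> data(std::size_t batch) {
    return {regions_.at(batch).data(), regions_.at(batch).size()};
  }

 private:
  std::vector<std::vector<int8_t>> regions_;
};

// Copy each image into its own region and return how many were copied.
// Regions beyond the number of images are left untouched.
std::size_t fill_batch(ToyBatchBuffer& buffer,
                       const std::vector<std::vector<int8_t>>& images) {
  const std::size_t count = std::min(buffer.batch_size(), images.size());
  for (std::size_t b = 0; b < count; ++b) {
    auto region = buffer.data(b);
    assert(images[b].size() == region.second);
    std::memcpy(region.first, images[b].data(), region.second);
  }
  return count;
}
```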

If the number of input images is less than the batch size, we have to construct a new tensor buffer that has a smaller batch size. This is not recommended because the DPU resources are not fully utilized.

We can read data from an input file as below.

std::ifstream(filename).read((char*)data, size).good();

We can start the graph runner as below.

//sync input tensor buffers

for (auto& input : inputs) {

input->sync_for_write(0, input->get_tensor()->get_data_size() /

input->get_tensor()->get_shape()[0]);

}

//run graph runner

auto outputs = runner->get_outputs();

auto v = runner->execute_async(inputs, outputs);

auto status = runner->wait((int)v.first, -1);

CHECK_EQ(status, 0) << "failed to run the graph";

//sync output tensor buffers

for (auto output : outputs) {

output->sync_for_read(0, output->get_tensor()->get_data_size() /

output->get_tensor()->get_shape()[0]);

}

It is important to synchronize the buffers before and after execute_async. Synchronization is left to the caller because pre-processing and post-processing could potentially be done in hardware with zero-copy, in which case it becomes unnecessary.

Similar to filling in the input tensor buffers, we can read and process the output tensor buffers as below.

auto tensor_buffer = outputs[0];

auto batch_size = outputs[0]->get_tensor()->get_shape()[0];

uint64_t data;

size_t size;

for(auto batch = 0; batch < batch_size; ++ batch) {

std::tie(data, size) = tensor_buffer->data({batch, 0, 0, 0});

std::ofstream(filename).write((char*)data, size).good();

}
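For a classification model, a typical post-processing step is to pick the highest-scoring classes from the output region of each image. The following is a minimal sketch, assuming the region holds one int8 score per class; `top_k` is a hypothetical helper, not a Vitis AI function. Because dequantization with a positive scale and softmax both preserve ordering, ranking the raw int8 scores yields the same classes as ranking the probabilities.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <numeric>
#include <vector>

// Hypothetical helper: indices of the k largest scores, best first.
// `scores` points at `count` int8 class scores for one image.
std::vector<int> top_k(const int8_t* scores, int count, int k) {
  std::vector<int> indices(count);
  std::iota(indices.begin(), indices.end(), 0);  // 0, 1, ..., count - 1
  k = std::min(k, count);
  // Only the first k positions need to be sorted.
  std::partial_sort(indices.begin(), indices.begin() + k, indices.end(),
                    [scores](int a, int b) { return scores[a] > scores[b]; });
  indices.resize(k);
  return indices;
}
```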

Note that the output tensor buffers are not ordered, so we need to be careful to find the proper output tensor buffer by name, i.e. tensor_buffer->get_tensor()->get_name().
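A lookup by name might be sketched as follows. `ToyNamedBuffer` is a hypothetical stand-in for an output tensor buffer whose name would, in the real API, come from tensor_buffer->get_tensor()->get_name(); the tensor names used in any example call are likewise made up.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for an output buffer that knows its tensor name.
struct ToyNamedBuffer {
  std::string name;
  std::vector<int8_t> data;
};

// Return the buffer whose tensor name matches, or nullptr if none does.
// The position of a buffer in the vector is deliberately not relied upon.
const ToyNamedBuffer* find_by_name(const std::vector<ToyNamedBuffer>& buffers,
                                   const std::string& name) {
  auto it = std::find_if(
      buffers.begin(), buffers.end(),
      [&name](const ToyNamedBuffer& b) { return b.name == name; });
  return it == buffers.end() ? nullptr : &*it;
}
```

Returning nullptr for a missing name lets the caller fail loudly instead of silently reading the wrong output.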

Conclusion

As the introduction above shows, Graph Runner converts the model into a single graph and makes deployment easier, especially for models with multiple subgraphs. In the past, the user needed to create a DPU runner for each subgraph and then implement the CPU Ops, manually connecting many inputs and outputs between the DPU and the CPU. Now, Graph Runner handles everything as one graph, which makes Vitis AI deployment much easier.

If you need more information, please contact bingqing@xilinx.com

About Bingqing Guo

Bingqing Guo is SW & AI Product Marketing Manager at CPG AMD. Bingqing has been working in the marketing of AI acceleration solutions for years. With her understanding of the market and effective promotion strategies, more users have begun to use AMD Vitis AI in their product development and recognized the improvements that Vitis AI has brought to their performance.

About Chunye Wang

Chunye Wang is Principal Software Engineer at CPG AMD. Chunye has been working in software development for 15+ years and now leads the Vitis AI software API development. As a key software developer, Chunye has made many contributions to improving the ease of use of Vitis AI and has always been passionate about software development.
